TACC Symposium

21 Sep 2018 | Conference | TACC, HPC, ML, AI, MPI

TACC Symposium at J.J. Pickle Research Campus, Austin, TX.

Day 1 (9/20/2018)

13:30~14:30 BoF: Machine Learning

BoF: Birds-of-a-Feather, informal discussion

This session discussed all kinds of questions about Machine Learning (ML), Neural Networks (NN), and Artificial Intelligence (AI). Here are some of the questions discussed.

Why does my neural network give low accuracy? What can I do to improve it?

- Check whether the training data are sufficient;

- Consider a more capable model (different NNs fit different problems, so analyze the problem before picking a model);

- Train for longer.

How is the machine learning model different from computational models in physics, chemistry, and others?

Could we integrate neural networks into those physical and chemical models to facilitate scientific computation, and if so, how?

An ML model is non-linear, so in theory it is capable of solving all kinds of problems. Before applying ML to your domain, check existing approaches such as linear regression first. Then compare their results with those of machine learning, and see whether ML can achieve better results.
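The advice to compare against a simple baseline first can be sketched as follows. This is only an illustration under stated assumptions: the data is synthetic (a noisy sine curve), and a k-nearest-neighbour regressor stands in for "the ML model"; neither was mentioned in the session.

```python
import math
import random

def fit_linear(xs, ys):
    """Ordinary least squares for y = a*x + b (closed form, one feature)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    a = sxy / sxx
    b = my - a * mx
    return lambda x: a * x + b

def fit_knn(xs, ys, k=5):
    """k-nearest-neighbour regression, standing in for a non-linear ML model."""
    pairs = list(zip(xs, ys))
    def predict(x):
        nearest = sorted(pairs, key=lambda p: abs(p[0] - x))[:k]
        return sum(y for _, y in nearest) / k
    return predict

def mse(model, xs, ys):
    """Mean squared error of `model` on held-out data."""
    return sum((model(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

def make_data(n, rng):
    """Assumed toy problem: y = sin(2x) plus Gaussian noise."""
    xs = [rng.uniform(-3.0, 3.0) for _ in range(n)]
    ys = [math.sin(2.0 * x) + rng.gauss(0.0, 0.1) for x in xs]
    return xs, ys

rng = random.Random(0)
train_x, train_y = make_data(300, rng)
test_x, test_y = make_data(100, rng)

linear_mse = mse(fit_linear(train_x, train_y), test_x, test_y)
knn_mse = mse(fit_knn(train_x, train_y), test_x, test_y)
print(f"linear baseline MSE: {linear_mse:.3f}")
print(f"k-NN model MSE:      {knn_mse:.3f}")
```

If the non-linear model does not clearly beat the baseline on held-out data, the extra complexity is probably not worth it for that problem.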

About dataset:

- You had better analyze the dataset first and make sure it is well labeled. It is hard for unsupervised learning to achieve ideal results;

- Synthetic training data is an ongoing research topic: when the sources of real training data are limited, we generate synthetic data for training, then validate the trained model with the real data.

In which areas has ML not yet succeeded, and where could people apply it later?

- Two main factors contribute to the recent success of ML: the advancement of computing power and abundant datasets. (This is exactly what Zhuoran told me.) Since computational resources are readily available, the areas not yet using ML are those that do not have their datasets ready. For example, you can hire people on Amazon to label images, because ordinary people can recognize what is in a picture, but you cannot do that for molecules, proteins, or stars, which require professional knowledge. Once datasets in those fields are collected, ML may succeed there as well.

- Synthetic animation is something ML is trying to do.

14:50~15:20 MRI and The Role of TACC’s High Performance Computational Facility: UT-H’s Perspective

The presenter, Ponnada Narayana, is from UTHealth, and is working on detecting brain diseases by processing Magnetic Resonance Imaging (MRI) images of brains. TACC helps in the image classification process.

15:20~15:50 Mechanistic Studies on Palladium(0)-Catalyzed Cyclization of Diketoesters

The presenter, Tulay A. Atesin, is from UT Rio Grande Valley. She uses TACC to synthesize and simulate molecules, with a focus on chiral molecules, where the mirror-symmetric structure can increase the number of candidates to explore.

15:50~16:30 TACC Tour

A tour of TACC’s server room.

Hikari 光 means light in Japanese. This machine is designed for energy efficiency. For example, there is no pump for its water cooling system, but the coolant is able to circulate by absorbing the heat.

Stockyard is a vast file system, connected to many other servers.

We walked through the racks of Stampede2 (it has more than those two columns of racks). There are cable trees (in blue) coming down from the top to connect all the blade servers, and InfiniBand switches are embedded somewhere between the racks.

This machine is fully immersed in mineral oil. There is no circulation system to spread the heat, but swirls are visible around the active machine. It is said to be the vision for the new cluster Frontera, which would be designed to work under oil as well.

16:30~17:10 Poster Session

Talked to a researcher working on the optimal placement of charging stations for electric vehicles. They collected a huge amount of trip data and built several matrices: for example, one start location can be one row and one destination one column, or one individual can be one row and one type of trip (business, personal, travel, etc.) one column. They also collected information on the electric vehicles themselves, for example, what percentage of vehicles can last for how many miles. Finally, they used Matlab to find the optimal solution, which covers about 90% of trips, and compared it with the existing electric vehicle charging stations. The interesting thing is that it took Matlab 3 hours to load their data, but the optimization finished in 50 seconds.
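To make the "cover as many trips as possible" idea concrete, here is a small sketch. It is not the researcher's Matlab formulation, which I did not see; it swaps in a plain greedy maximum-coverage heuristic, and it assumes that a station in a zone covers every trip starting or ending there. The zone names and trips are invented.

```python
def greedy_station_placement(trips, num_stations):
    """Pick zones one at a time, each time taking the zone that covers
    the most not-yet-covered trips (greedy maximum coverage)."""
    # Assumption: a station in zone z covers any trip starting or ending in z.
    uncovered = set(range(len(trips)))
    chosen = []
    zones = sorted({zone for trip in trips for zone in trip})
    for _ in range(num_stations):
        best_zone, best_gain = None, set()
        for zone in zones:
            if zone in chosen:
                continue
            gain = {i for i in uncovered if zone in trips[i]}
            if len(gain) > len(best_gain):
                best_zone, best_gain = zone, gain
        if best_zone is None:  # no remaining zone covers anything new
            break
        chosen.append(best_zone)
        uncovered -= best_gain
    coverage = 1.0 - len(uncovered) / len(trips)
    return chosen, coverage

# Hypothetical toy trips as (origin zone, destination zone) pairs.
trips = [("A", "B"), ("A", "C"), ("B", "C"), ("D", "A"),
         ("E", "F"), ("F", "E"), ("C", "D"), ("G", "H")]
stations, coverage = greedy_station_placement(trips, num_stations=3)
print(stations, f"coverage = {coverage:.3f}")
```

The greedy choice is a heuristic and is not guaranteed to be optimal; a real study would also have to account for vehicle range, which this sketch ignores.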

Day 2 (9/21/2018)

8:00~9:00 Tutorial: Getting Started at TACC

This session introduced some of the administrative processes for using TACC, such as creating accounts, creating projects, and requesting allocations. The slides are available online.

For students who graduate and leave UT, can they keep using the account created with their UT EID?

After UT students graduate, the UT EID account can be kept, but they need to update the information with the new institution they are going to.

Requesting an account with UT EID has no internal review process.

There are Instructional and Educational projects for short-term training, and semester-long education.

Reporting any related publications helps accelerate the review of an allocation request.

How long is the cycle for second-factor authentication?

For one transaction.

Second-factor authentication is required for all resources except the portal.

9:00~9:30 Collaborative text mining and Twitter dialectology

The presenter, Lars Hinrichs, is from Texas English Linguistic Lab (TELL).

Their study is based on Twitter records and looks at regional divergence in language use. They create separate word vectors for variants from different regions, calculate the “distance” between them, and analyze it to understand the reasons.
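A rough sketch of what a "distance" between regional word vectors could look like is below. The talk did not say how the vectors were built, so this assumes simple context-word counts and cosine distance; the tweets are invented for illustration.

```python
import math
from collections import Counter

def context_vector(tweets, target, window=2):
    """Count the words appearing within `window` tokens of `target`."""
    counts = Counter()
    for tweet in tweets:
        tokens = tweet.lower().split()
        for i, tok in enumerate(tokens):
            if tok == target:
                lo, hi = max(0, i - window), i + window + 1
                counts.update(t for j, t in enumerate(tokens[lo:hi], lo) if j != i)
    return counts

def cosine_distance(u, v):
    """1 - cosine similarity of two sparse count vectors."""
    dot = sum(u[w] * v[w] for w in u.keys() & v.keys())
    norm_u = math.sqrt(sum(c * c for c in u.values()))
    norm_v = math.sqrt(sum(c * c for c in v.values()))
    if norm_u == 0 or norm_v == 0:
        return 1.0
    return 1.0 - dot / (norm_u * norm_v)

# Hypothetical tweets, invented for illustration only.
region_a = ["grab a coke with lunch", "a cold coke and fries"]
region_b = ["what kind of coke you want sprite", "a coke like sprite or pepsi"]

vec_a = context_vector(region_a, "coke")
vec_b = context_vector(region_b, "coke")
dist_same = cosine_distance(vec_a, vec_a)
dist_cross = cosine_distance(vec_a, vec_b)
print(f"same region: {dist_same:.3f}, across regions: {dist_cross:.3f}")
```

A larger distance for the same word across regions suggests that it is used in different contexts, which is the kind of signal a dialectology study could then examine by hand.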

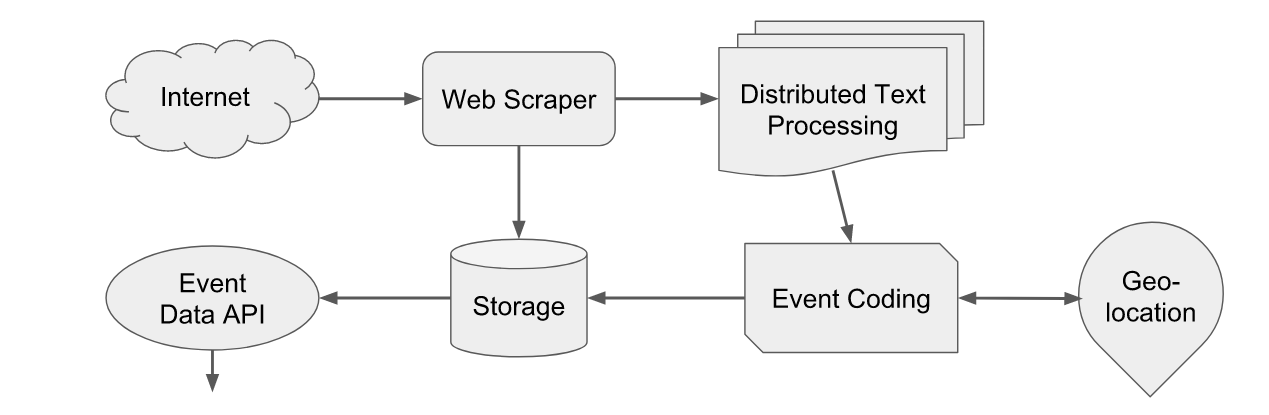

9:30~10:00 How we scaled our big data social science project with XSEDE/TACC

The presenter is from UT Dallas. One of their projects applies Natural Language Processing (NLP) to news reports in order to extract event data based on semantics. Due to the high sampling frequency, the data size grows quickly. They also want to make the data publicly available in a convenient and user-friendly way. The Big Data Cluster at UT Dallas cannot host such a large dataset well, so they tried XSEDE/Jetstream. Their system architecture is like the following chart.

Inter-module communication is supported by Apache Kafka + ZooKeeper.

Their data is now available online.

What are the complexity and advantages of applying NLP to event coding?

Dictionary translation takes a lot of human work.

How does the validation process work?

- Run queries and check whether the expected data comes back.

- Make predictions and see whether they align with what others would say in the real world.

What is the challenge in geocoding, and why is geo-parsing difficult?

- Repeated place names.

- Names in other languages.

10:20~10:50 Using TACC Resources in the Advent of the Big Data Era of Observational Astronomy

The presenter, Cynthia Froning, is from McDonald Observatory. There are data-intensive applications in their work. For example, WFIRST, the successor to the Hubble Telescope, generates images at a rate of 11 TB/day, and the Outer world Brokers (which give the right data to the right audiences) needs support from TACC. They use a Convolutional Neural Network (CNN) to recognize planets hidden in the images. For computing, one field takes 3 hours using 78 CPUs.

10:50~11:20 Supersonic Film Cooling in Rocket Engine Nozzles

The presenter, David McKinnon, is from Firefly, studying thermal fluids for rocket engine cooling.

Their model uses Computational Fluid Dynamics (CFD), which involves a lot of computation and needs support from TACC.

11:20~12:20 Poster session

Talked to a physicist developing programs to solve the 3-body quantum problem, where matrix-vector multiplications are performed on matrices with 1 trillion elements per row.

Discussed the runtime of ML workloads with some TACC users. One of them uses a CNN for heart disease detection, where the input data is merely a 1-D signal, so the training can finish within several hours. Their problem is keeping the ssh session alive. Two solutions:

- Run a simple ping task to guarantee periodic communication. It is similar to the approach I used at ORNL.

- Schedule the task by submitting a script, which is the right way to use TACC.

Even longer tasks like MLPerf exceed the 48-hour time limit on TACC, so other solutions are needed. They suggested timing the run to find the most time-consuming function and then optimizing it, which I think is not appropriate for a benchmark. Another idea they mentioned is checkpointing: restarting from where the job stopped could be worth a try.
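The checkpoint-and-restart idea can be sketched in a few lines. This is a generic illustration, not anything TACC-specific: the file name, the JSON format, and the "training step" are all assumptions, and a real ML job would save model and optimizer state through its framework instead.

```python
import json
import os
import tempfile

def run_with_checkpoints(total_steps, ckpt_path, max_steps_this_run):
    """Resume from `ckpt_path` if it exists, run at most `max_steps_this_run`
    steps, and save progress after every step."""
    state = {"step": 0, "running_sum": 0}
    if os.path.exists(ckpt_path):
        with open(ckpt_path) as f:
            state = json.load(f)

    steps_done = 0
    while state["step"] < total_steps and steps_done < max_steps_this_run:
        state["running_sum"] += state["step"]  # stand-in for one training step
        state["step"] += 1
        steps_done += 1
        # Write to a temporary file and rename it, so a job killed mid-write
        # never leaves a truncated checkpoint behind.
        tmp_path = ckpt_path + ".tmp"
        with open(tmp_path, "w") as f:
            json.dump(state, f)
        os.replace(tmp_path, ckpt_path)
    return state

# Hypothetical checkpoint location; two calls mimic two consecutive batch jobs.
ckpt = os.path.join(tempfile.mkdtemp(), "job.ckpt.json")
first = run_with_checkpoints(10, ckpt, max_steps_this_run=4)     # job 1 hits its limit
second = run_with_checkpoints(10, ckpt, max_steps_this_run=100)  # job 2 resumes
print(first, second)
```

With this pattern a long run becomes a chain of jobs that each fit under the queue's time limit, at the cost of some I/O per checkpoint.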

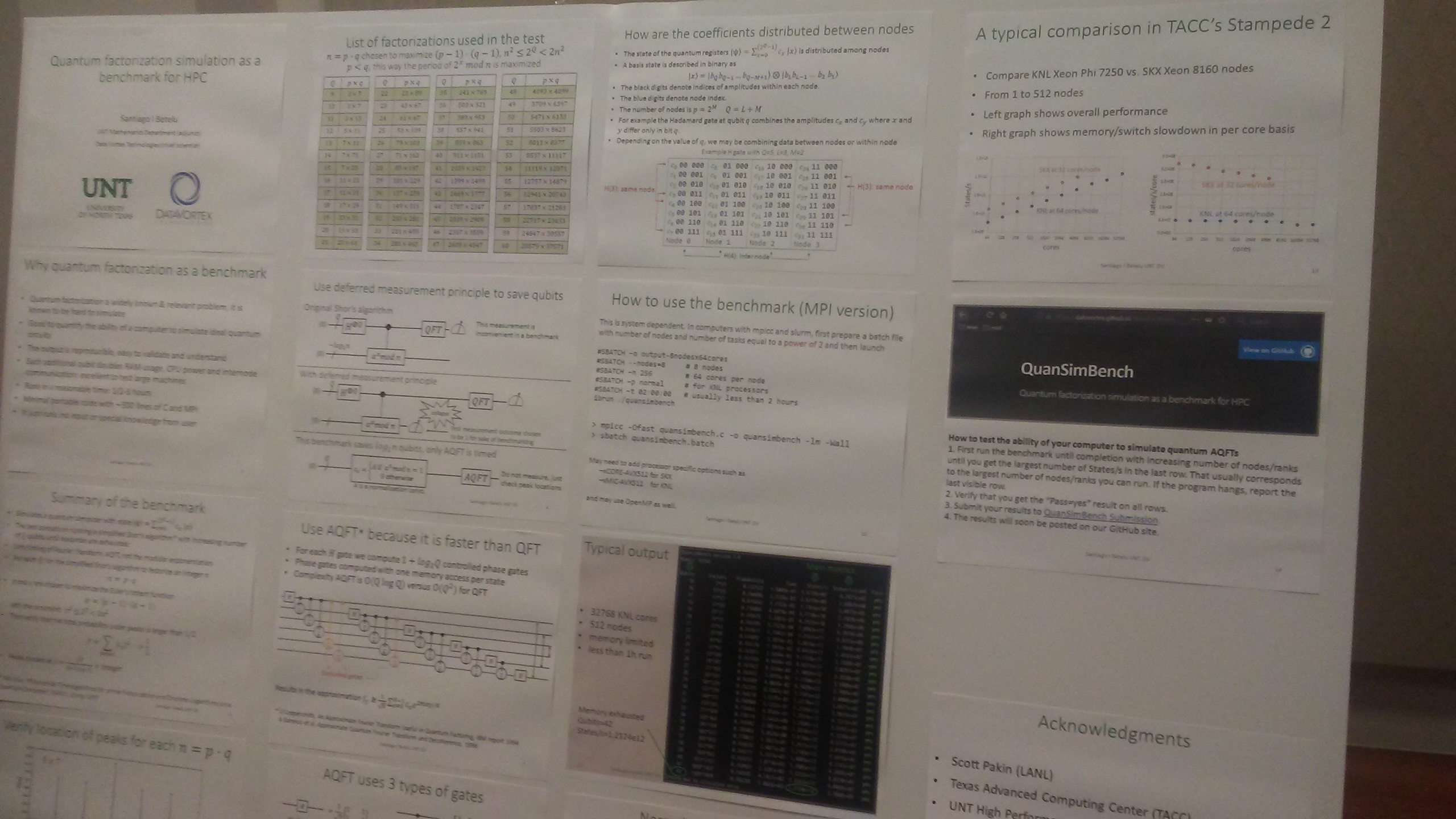

Talked to a researcher from the University of North Texas (UNT). He wrote a quantum computer simulator in ~300 lines of ANSI C, and claims the simulation program is a good benchmark for testing supercomputers. The algorithm running on the simulated quantum computer is Shor’s algorithm. The critical part, the Quantum Fourier Transform (QFT), is simplified. He calls it the Approximate Quantum Fourier Transform (AQFT), whose accuracy and noise resistance are adjustable by changing the number of gates used in the quantum computer. The main metrics are the maximum number of qubits that can be simulated (which is actually obvious given the memory capacity, I think), and simulated states per second.
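My remark that the maximum qubit count follows from memory capacity can be checked with a quick calculation. The sketch assumes a full state-vector simulation that stores 2^n complex amplitudes at 16 bytes each (double-precision complex); I do not know whether his simulator stores the state this way, and the memory sizes below are arbitrary examples, not TACC node specifications.

```python
def max_qubits(memory_bytes, bytes_per_amplitude=16):
    """Largest n such that 2**n amplitudes fit in `memory_bytes`.

    Assumes a full state vector; returns 0 if not even 2 amplitudes fit.
    """
    n = 0
    while (2 ** (n + 1)) * bytes_per_amplitude <= memory_bytes:
        n += 1
    return n

GIB = 2 ** 30
# Hypothetical memory sizes, chosen only for illustration.
for label, mem in [("16 GiB", 16 * GIB), ("1 TiB", 1024 * GIB)]:
    print(label, "->", max_qubits(mem), "qubits")
```

Each extra qubit doubles the memory needed, so even a large jump in memory buys only a handful of additional qubits.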

Another poster presenter explained how he uses suffix array kernel smoothing on gene sequences. It sounds like pattern detection, and may be worth trying on program phase behavior detection as well.

13:20~14:20 Panel with TACC Experts

Seven TACC experts from different groups:

- Cloud and Interactive Computing

- High Performance Computing (HPC)

- Visualization

- Web and Application Interface

- Life Science

- Decision

- Storage and Data Management

Resources for Virtualization Teaching?

The experts mentioned two; the latter one is Jetstream.

Data share/protection with outside institution?

There are certain controls and protections.

TACC also offers databases.

Agave API? How to use it? It has Docker and can simply run containers?

Sounds like a convenient interface for web access?

It is worth checking whether it supports virtual machines and can deploy machine learning applications quickly.

For HPC group, how do you make performance prediction?

The proposals contain specific numbers for hardware that does not exist yet, which seems kind of magic.

Those numbers come from talking to vendors and from analysis of users’ applications.

And the prediction results are good for three out of five cases.

14:20~16:00 Tutorial: Machine Learning

Tutor: Weijia Xu

A computer program is said to learn from experience E with respect to some class of tasks T and performance measure P, if its performance at tasks in T, as measured by P, improves with experience E. – Mitchell 1997

Three large schools of ML methods:

- Supervised Learning: learn a predictive model based on the association between feature vectors and labels;

- Unsupervised Learning: learn to find the category structure among data;

- Deep Learning: learn to extract feature vectors as well, w/ or w/o supervision;

Kernel function: maps the original feature space to some higher-dimensional feature space where the training set is separable.

The kernel function extends the Support Vector Machine (SVM) to the non-linear SVM.
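A tiny example helps show why mapping to a higher-dimensional space makes data separable. The data and the feature map x -> (x, x^2) are my own toy choices, not from the tutorial; the kernel shown is simply the inner product of that particular map.

```python
def phi(x):
    """Explicit feature map from 1-D to 2-D: x -> (x, x^2)."""
    return (x, x * x)

def kernel(x, z):
    """k(x, z) = x*z + (x*z)^2, equal to phi(x) . phi(z) without building phi."""
    return x * z + (x * z) ** 2

def separable_by_threshold(values, labels):
    """True if one threshold puts all +1 on one side and all -1 on the other."""
    pos = [v for v, y in zip(values, labels) if y == +1]
    neg = [v for v, y in zip(values, labels) if y == -1]
    return max(neg) < min(pos) or max(pos) < min(neg)

# Toy data: points far from the origin are +1, points near it are -1.
xs = [-3.0, -2.0, -0.5, 0.0, 0.5, 2.0, 3.0]
labels = [+1, +1, -1, -1, -1, +1, +1]

in_original_space = separable_by_threshold(xs, labels)
in_mapped_space = separable_by_threshold([phi(x)[1] for x in xs], labels)
print(in_original_space, in_mapped_space)
```

No single cut on x splits the classes, but a cut on the x^2 coordinate does. An SVM with a kernel only ever needs `kernel(x, z)`, so it gets the benefit of the mapped space without computing `phi` explicitly.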

How to define the improvement of performance?

With better data or a better algorithm, you get higher performance (I heard IPC? Execution time?).

Support for ML on TACC:

- Software:

Scikit-learn is a Python package for machine learning. A nice thing about scikit-learn is that it has a cheat sheet that categorizes its tools by use case.

Spark and MLlib are there to help with scaling. Spark is a scalable data processing framework that offers a way around memory limitation issues. Its Python interface is PySpark. MLlib is a library implementing a common set of machine learning algorithms.

TensorFlow and Caffe are supported due to their big user bases, and PyTorch is on the way. TensorFlow is optimized at a low level with CUDA/C++, but has both C++ and Python frontends.

Horovod is a distributed training framework for TensorFlow. It has a smarter and more efficient synchronization strategy.

Keras has a unified interface to ease the programming effort. It means less coding but basically no performance loss, because the code is translated into lower-level code.

- Hardware:

Wrangler is a 96-node cluster with a flash-based storage subsystem to support big data processing, and it provides the best support for Hadoop and Spark based workflows.

Maverick is a cluster with 132 K40 GPUs, and is the only public resource equipped with GPUs.

- Interfaces:

As normal tasks:

- idev

- sbatch

With GUI (Visualization Portal):

- Through VNC session

- Jupyter notebook

- Zeppelin notebook

- Rstudio

An ongoing research project is Smart City – Traffic Video Analysis, in collaboration with Capital Metro.

J.J Pickle Research Campus

Conclusion

The TACC Symposium was not exactly what I expected. The tutorials are quite basic, aimed at helping scientists start using TACC. However, providing user-friendly interfaces is an essential goal for HPC, and it is encouraging to see HPC facilitate so many different fields.