TLC: Tag-Less Cache

30 Jan 2018 Comparch Seminar HW Cache TLB ideas Microarchitecture

David Black-Schaffer from Uppsala University gave an impressive presentation on their TLC (Tag-Less Cache) work.

TLC is a novel cache hierarchy without a tag array. Instead, it stores the metadata separately from the data, so that unnecessary cache accesses can be avoided.

Motivation

Tags have rarely evolved over the decades

Over the past decades, processor architecture has changed a lot.

From single-core to multicore, from in-order to out-of-order, from scalar to superscalar…

Many complicated components have been added as well, such as GPUs, co-processors and accelerators…

Yet the tag array has remained indispensable in cache design.

Tags and data are fused.

The tag array stores information such as the valid bit, the dirty bit, part of the address, and the replacement bits. These are not data for the CPU to compute on; they give the cache controller the extra information it needs to locate and manage data. In other words, they are metadata.

Searching the tag array for a match costs time and energy

Tags were introduced to bridge the capacity gap between the cache and main memory: many memory addresses map to the same cache location, and the tag tells them apart.

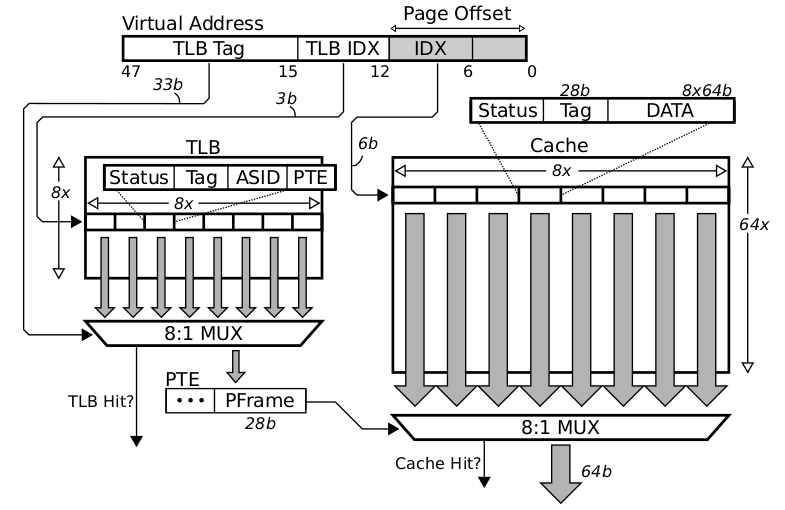

A conventional cache uses part of the address as the offset, part as the index, and the remaining part as the tag:

| Tag | Index | Offset |

To make sure the data cached in a line is exactly the data we are looking for, we compare the tag part of the address with the tag associated with the cache line.

This becomes more costly as associativity increases, because the comparison has to be done for every way in the set.
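To make the cost concrete, here is a minimal sketch of a conventional set-associative lookup. The geometry (64-byte lines, 64 sets, 8 ways) and all names (`Line`, `split_address`, `lookup`, `make_cache`) are my own assumptions for illustration, not taken from the talk.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

# Hypothetical geometry, chosen only for illustration.
LINE_BYTES = 64
NUM_SETS = 64
NUM_WAYS = 8

OFFSET_BITS = LINE_BYTES.bit_length() - 1  # 6 bits of offset
INDEX_BITS = NUM_SETS.bit_length() - 1     # 6 bits of index


@dataclass
class Line:
    valid: bool = False
    tag: int = 0


def make_cache() -> List[List[Line]]:
    """Build an empty cache: NUM_SETS sets of NUM_WAYS lines each."""
    return [[Line() for _ in range(NUM_WAYS)] for _ in range(NUM_SETS)]


def split_address(addr: int) -> Tuple[int, int, int]:
    """Split an address into (tag, index, offset)."""
    offset = addr & (LINE_BYTES - 1)
    index = (addr >> OFFSET_BITS) & (NUM_SETS - 1)
    tag = addr >> (OFFSET_BITS + INDEX_BITS)
    return tag, index, offset


def lookup(cache: List[List[Line]], addr: int) -> Tuple[Optional[int], int]:
    """Return (hit way or None, number of tag comparisons performed)."""
    tag, index, _ = split_address(addr)
    comparisons = 0
    for way, line in enumerate(cache[index]):
        comparisons += 1  # one tag comparison per way inspected
        if line.valid and line.tag == tag:
            return way, comparisons
    return None, comparisons
```

The loop models the comparisons one after another; real hardware typically compares all ways of a set in parallel, so the cost shows up mainly as energy rather than as a serial delay. Either way, a miss always pays for all `NUM_WAYS` comparisons.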

Moreover, as more cache levels are built into the processor, this comparison is repeated at every level!

Worse, in a multicore processor, on reaching the shared LLC (Last Level Cache) you might be told that the data is cached in another core’s private cache, which you then need to search to get the latest copy!!

Finally, it gets really annoying when the data is in flight: you might fail to find it after searching, because in the meantime it was written back from that core’s buffer!!!

Brief Introduction of TLC Implementation

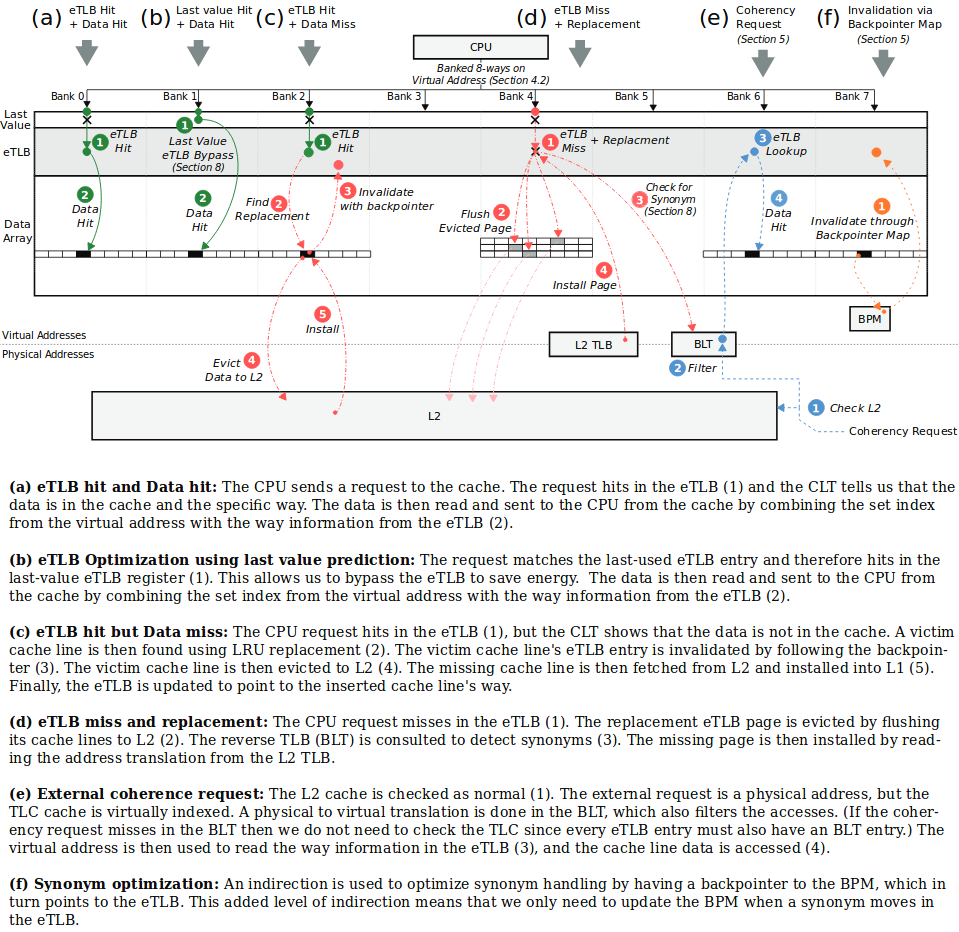

To avoid wasting time on searching, TLC separates the metadata from the data and stores it in a new, pointer-like structure. The idea is that the metadata explicitly points to the location of the data, so the CPU can fetch the data directly without searching.

TLC for L1 dcache

Their first attempt targeted the L1 data cache and was published at MICRO’46.

They chose to extend the TLB (Translation Lookaside Buffer) to hold the metadata, since address translation is needed for every cache access anyway, except in a VIVT (Virtually Indexed, Virtually Tagged) cache.

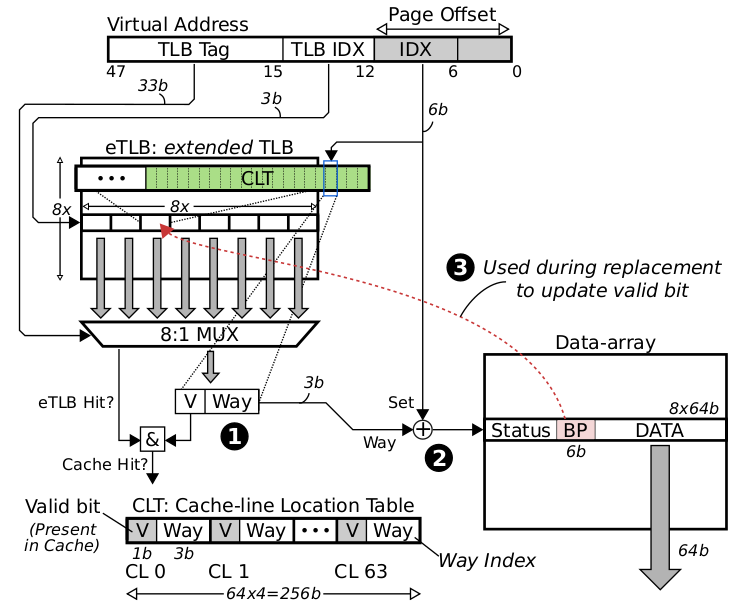

Because the TLB works at page granularity while the metadata is per cache line, they append a series of bits called the CLT (Cache-line Location Table) to each TLB entry, indicating the location of each cache line belonging to that page.

They call the result the eTLB (extended TLB).

Each cache line's CLT entry has one valid bit and three bits to select a way (their baseline is an 8-way VIPT cache).

This obviously requires extra storage, but in exchange the tag array is removed.
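As a rough feel for that extra storage, the CLT size per TLB entry can be worked out from the numbers above. The page size and line size below are my assumptions (4 KiB pages, 64-byte lines); only the 8-way associativity and the one valid bit come from the text, and `clt_bits_per_tlb_entry` is a hypothetical helper.

```python
PAGE_BYTES = 4096  # assumption: 4 KiB pages
LINE_BYTES = 64    # assumption: 64-byte cache lines
NUM_WAYS = 8       # from the text: 8-way cache


def clt_bits_per_tlb_entry(page_bytes: int, line_bytes: int, num_ways: int) -> int:
    """Bits of CLT appended to one TLB entry: (valid + way bits) per line in the page."""
    lines_per_page = page_bytes // line_bytes
    way_bits = (num_ways - 1).bit_length()  # 3 bits for 8 ways
    bits_per_line = 1 + way_bits            # one valid bit plus the way selector
    return lines_per_page * bits_per_line


print(clt_bits_per_tlb_entry(PAGE_BYTES, LINE_BYTES, NUM_WAYS))  # prints 256
```

Under these assumptions each TLB entry grows by 64 × 4 = 256 bits. Whether that is a net win depends on the number of TLB entries versus the size of the tag array that is removed, which this sketch does not model.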

So the location of the data arrives together with the physical address.

According to their design, there are two cases raising a cache miss:

- the valid bit for the cache line is not set;

- eTLB miss;

So the eTLB has to cover everything held in the L1 dcache. But as mentioned before, the TLB works at page granularity, so its coverage should easily be large enough to satisfy this requirement.
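The lookup and its two miss cases can be sketched as follows. This is only my reading of the idea, not the authors' implementation: the eTLB is modelled as a plain dictionary keyed by virtual page number, the page and line sizes are assumed, and the names `CltEntry`, `ETlbEntry` and `etlb_lookup` are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple

PAGE_BYTES = 4096  # assumption: 4 KiB pages
LINE_BYTES = 64    # assumption: 64-byte cache lines
LINES_PER_PAGE = PAGE_BYTES // LINE_BYTES


@dataclass
class CltEntry:
    valid: bool = False  # is this line currently in the L1 dcache?
    way: int = 0         # which way holds it (3 bits for an 8-way cache)


def _empty_clt() -> List[CltEntry]:
    return [CltEntry() for _ in range(LINES_PER_PAGE)]


@dataclass
class ETlbEntry:
    ppn: int                                                # physical page number
    clt: List[CltEntry] = field(default_factory=_empty_clt)  # one entry per line in the page


def etlb_lookup(
    etlb: Dict[int, ETlbEntry], vaddr: int
) -> Tuple[str, Optional[int], Optional[int]]:
    """Return (status, physical address, way) for a virtual address."""
    vpn, page_offset = divmod(vaddr, PAGE_BYTES)
    entry = etlb.get(vpn)
    if entry is None:
        return "etlb_miss", None, None   # miss case: no eTLB entry for the page
    paddr = entry.ppn * PAGE_BYTES + page_offset
    loc = entry.clt[page_offset // LINE_BYTES]
    if not loc.valid:
        return "line_miss", paddr, None  # miss case: valid bit not set
    return "hit", paddr, loc.way         # translation and location in one lookup
```

Note that there is no tag comparison anywhere on the hit path: the way comes straight out of the CLT, together with the translated address.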

TLC for multi-level cache

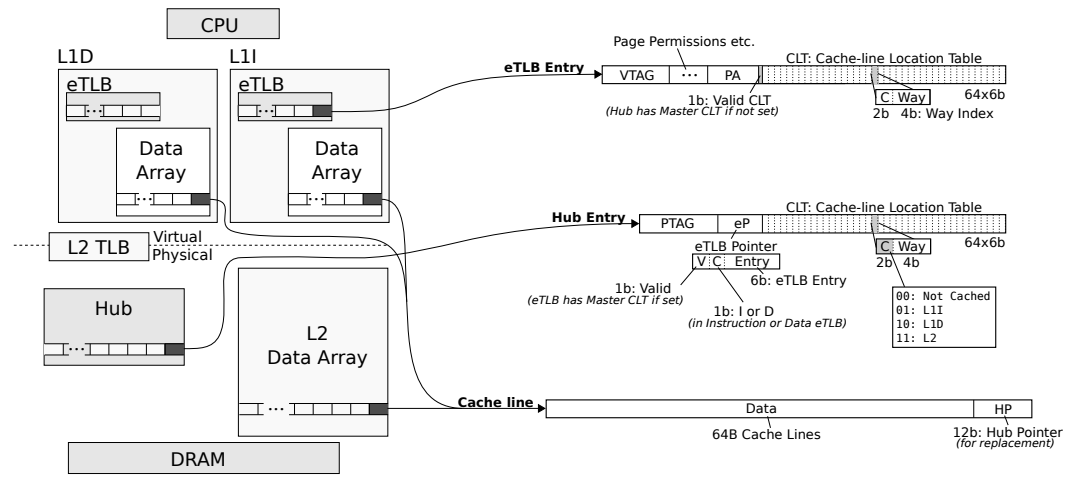

They later extended TLC to D2D (Direct-to-Data) for multi-level cache hierarchies, published at ISCA’41.

In addition to the CLT bits indicating which way the data lies in, they extended the valid bit to two bits, so that an entry can point to a cache line in any level.
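One way to picture such an entry is as a small packed field: two level bits followed by the way bits. The concrete encoding below (which value means which level, and reusing a 3-bit way field for every level) is purely my assumption for illustration; the paper may lay the bits out differently.

```python
LEVEL_BITS = 2  # from the text: the valid bit is extended to two bits
WAY_BITS = 3    # assumption: same 3-bit way field as the 8-way L1 design

# Hypothetical meaning of the two-bit level field.
NOT_CACHED, IN_L1, IN_L2, IN_L3 = 0, 1, 2, 3


def pack_location(level: int, way: int) -> int:
    """Pack (level, way) into one CLT entry: level in the high bits, way in the low bits."""
    if not 0 <= level < (1 << LEVEL_BITS):
        raise ValueError("level does not fit in the level field")
    if not 0 <= way < (1 << WAY_BITS):
        raise ValueError("way does not fit in the way field")
    return (level << WAY_BITS) | way


def unpack_location(bits: int) -> tuple:
    """Inverse of pack_location: return (level, way)."""
    return bits >> WAY_BITS, bits & ((1 << WAY_BITS) - 1)


entry = pack_location(IN_L2, 5)
print(entry, unpack_location(entry))  # prints 21 (2, 5)
```

With an encoding like this, a single entry says both whether the line is cached at all and exactly where to fetch it from, which is what removes the level-by-level search.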

And the eTLB becomes multi-level, of course.

TLC is more powerful in multi-level caches, because a single eTLB lookup now reveals more about the location of the data.

TLC for multicore processor

Their most recent achievement is extending D2D to D2M (Direct-to-Master), a NUCA (Non-Uniform Cache Access) policy for multicore processors, published at HPCA’23. It can now point to data cached in another node.

Benefits

✓ Reduce unnecessary cache tag comparisons

✓ Improve cache performance

✓ Energy saving

✓ Break conventional cache hierarchy

✓ Introduce flexibility, further create chances for new optimization

✘ Potential effect on cache replacement efficiency

✘ Need new strategies to handle issues like homonyms and synonyms

✘ The control logic becomes complicated

Credits

- David Black-Schaffer - I hope one day I can become a good presenter like you, with such fluent English, giving such a vivid presentation and spreading innovative ideas.

- A split cache hierarchy for enabling data-oriented optimizations - D2M paper.

- Data placement across the cache hierarchy: Minimizing data movement with reuse-aware placement - Cache replacement optimization based on TLC.

- The Direct-to-Data (D2D) Cache: Navigating the cache hierarchy with a single lookup - D2D paper.

- TLC: A tag-less cache for reducing dynamic first level cache energy - TLC paper.

- Virtual Cache Issues - Introduction on synonyms and homonyms.